A plan for saving democratic capitalism from itself

Economic systems must balance efficiency with resilience in order to survive and flourish.

The marriage of democracy and capitalism has been arguably the greatest force for good in history, giving the creativity and enterprise of talented individuals the freedom to generate value in which all of us can share. History also shows, however, that this system’s continued survival cannot be guaranteed.

Machines that process inputs into outputs are judged and compared according to the efficiency with which they convert the one into the other. A car that travels farther on the same amount of fuel than another car is, other factors being equal (road conditions, for instance, or weather), more efficient and therefore better by that measure than the other car. If we assume that the economy is a machine, then the same cause-and-effect sequence must apply. Greater efficiency drives growth.

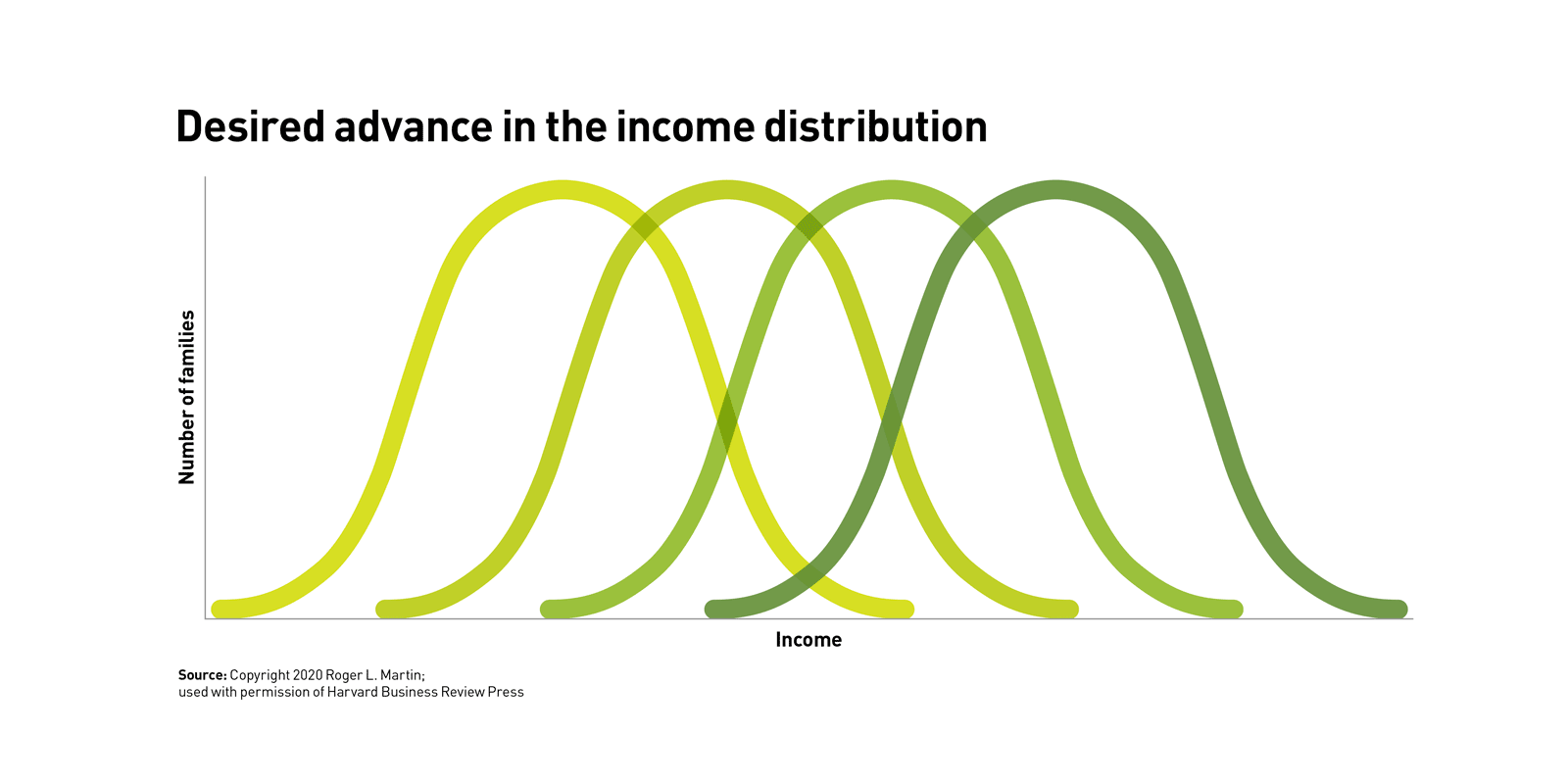

In the context of democratic capitalism, we would expect a largely bell-shaped distribution of economic outcomes, such as those related to income and wages, and that the largest part of the population would be the “middle class.” The model for efficient economic growth would be the distribution of income moving steadily to the right over time (see “Desired advance in the income distribution”).

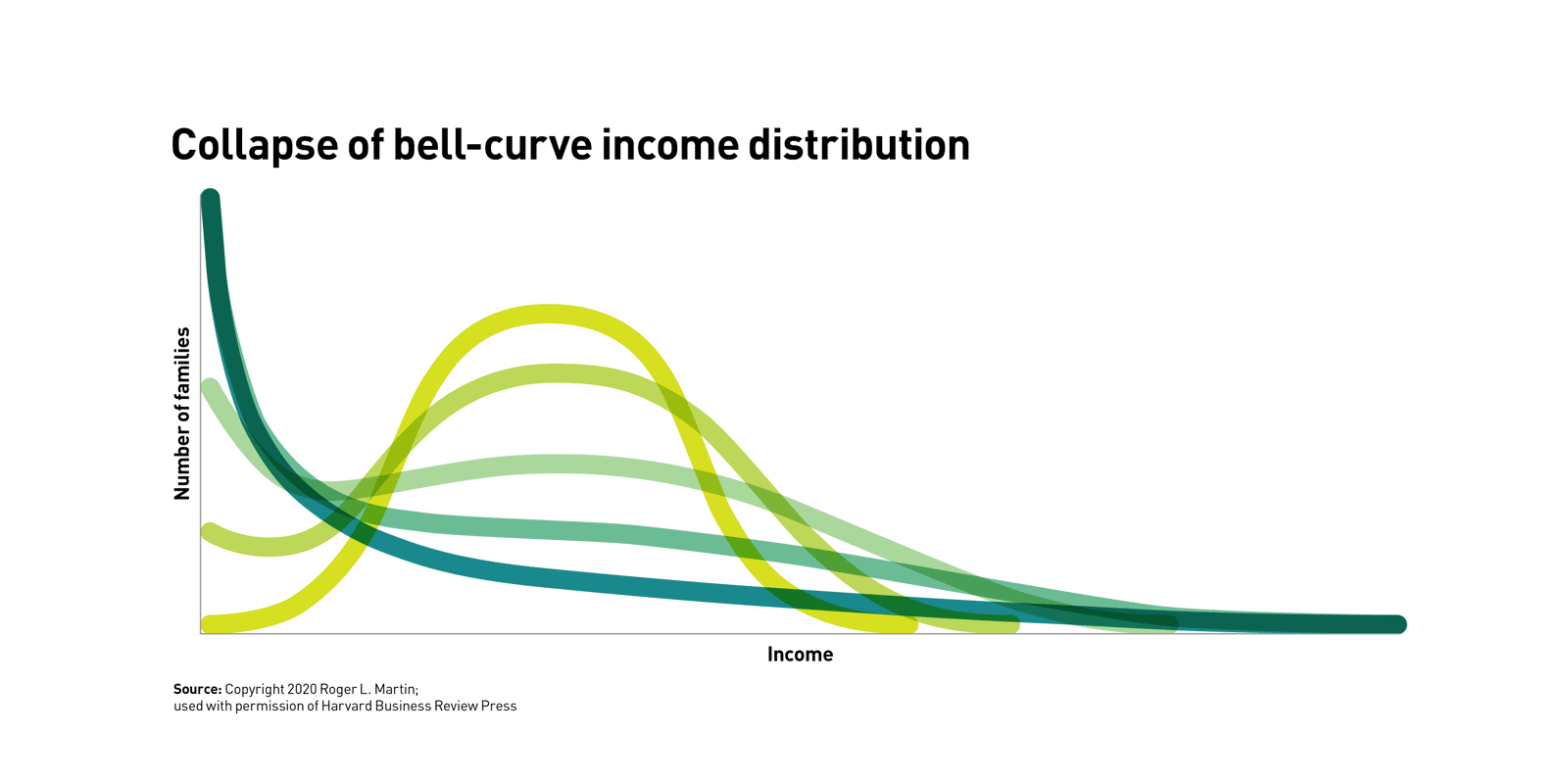

But our economic machine isn’t producing either the assumed distribution or the favorable movement, and it hasn’t been for some time. Instead, the bulge in the middle is slowly but surely shrinking, and the prosperity of a vast majority of families is no longer moving smartly upward. The bell-shaped income distribution is collapsing, with more wealth concentrated at the very top of the income range (see “Collapse of bell-curve income distribution”).

This collapse can be compared to a sand pile onto which grains of sand keep dropping. At some point one more grain brings the pile down catastrophically: The pressure applied by earth’s relatively strong gravity accelerates that final grain with enough force to jar enough other grains out of position to drive a total collapse. For the U.S. economy, efficiency is gravity’s equivalent. We are adding pressure through the opening of trade, deregulation, capital markets consolidation, and other factors. As a result, we see increasing consolidation as more and more industries turn into oligopolies or monopolies, with outsized rewards for the few while the median family in the economy stagnates.

Without a fundamental shift in how we manage the economy, outcomes will only get more out of alignment with our hopes and assumptions. To understand why this is happening, we need to reexamine our theories about what improves the economy. Rather than striving singularly for ever more efficiency, we need to strive for a balance between efficiency and a second feature: resilience.

A system is resilient to the extent that, over time, it can adjust to its changing context in ways that allow it to continue functioning and delivering its desired benefits. In the depths of the Great Depression, American democratic capitalism was resilient. It shifted, adjusted, and adapted to shocks to its very core, but it maintained the combination of democracy and capitalism. In many other developed countries, democratic capitalism was not sufficiently resilient to survive and was eventually replaced by fascism or communism. The recent inattention to the resilience of our democratic system — thanks to the singular and obsessive pursuit of efficiency — has jeopardized that combination.

Nonresilient systems tend to die explosively. The U.S. capital markets blew up spectacularly between 2008 and 2009 and could well have gone extinct had it not been for intervention by the federal government. In 2011, in Japan’s Fukushima nuclear reactor disaster, two of the reactors, lacking resilient systems, melted down, and then the buildings housing them exploded, spewing enormous amounts of radiation into the region. The latest estimate of the cleanup costs alone is more than US$600 billion.

In contrast, inefficient systems tend to fade away slowly, as systems with superior fitness replace them. There is no way to guarantee the resilience of a system that doesn’t pay attention to efficiency. It might appear to be resilient, but it will eventually be overwhelmed by a more efficient adversary. Authorities could have wrapped the Fukushima nuclear facility in layer upon layer of redundancy to make it particularly resilient. But the cost would likely have been so high that it would have made the power produced economically unviable.

To achieve the delicate but more desirable balance between efficiency and resilience, we need to explicitly retire the machine model of the economy and consciously adopt the model of a natural system. Design principles for achieving this balance therefore have to take account of the three core features of a natural system: its complexity, its adaptivity, and its systemic structure.

Design for complexity

The key challenge of complexity within a natural system is that the operation of a complex system is so opaque and inscrutable that it is not feasible to predict performance with even reasonable accuracy, as illustrated by economists’ forecasts of both America’s 2008 to 2009 economic performance and the consequences (or lack thereof) of the January 2013 “fiscal cliff.” This means that forecasting just how much pressure a system can bear and then applying that exact amount of pressure is a more dangerous game than we imagine. While pressure can produce more efficiency, when applied without constraints, it can cause a collapse of the bell-shaped distribution of economic outcomes.

The goal in designing a system, therefore, is to balance pressure for more efficiency with friction to limit its damaging extremes. This, I must emphasize, is not a radical or new concept. The voluntary imposition of limits and constraints in the interest of prolonging the life span of a system or economic agent is a survival strategy as old as humanity itself. Let me illustrate the deliberate introduction of friction in the context of America’s most iconic sport: baseball.

Baseball pitchers live in constant danger of overstressing their pitching arm to the point of career-ending injury. And in the complexity of elite pitching, it is hard to figure out when it might happen and, when it does, why. Clearly, some of it is genetic and some is random. But one factor that has come into focus is the number of pitches a starting pitcher throws in a given game. It is not the first 80 pitches of the game that are likely to overstress a pitcher’s arm: It is the last 30 pitches in a 110-pitch game. But an aggressive pitcher will likely feel he can pitch forever — and an aggressive coach will be very tempted to believe him, especially in a crunch game. And that is precisely why in modern baseball, there are pitch-count restrictions (and innings-per-year restrictions) imposed on pitchers by their teams.

These restrictions arguably hurt efficiency. Other things being equal, the team would want its best pitcher to pitch more innings of more games. And for a while, that might pay off. But with each game, the risks would rise that the pitcher’s arm would be overstressed, forcing the pitcher to bow out for a protracted period — if not forever. Pitch-count restrictions make it more likely that the pitcher will be able to bounce back from each pitching start and will perform well for the entire year, and the next, and the next. I find it useful to think about pitch-count restrictions as a productive friction. By limiting the use of a particular resource or system component (the pitcher), the life and usefulness of that resource are prolonged, to the overall benefit of the system.

The balance of pressure with friction is not limited to the world of sports. The U.S. Federal Reserve Board, for example, uses interest rates to apply friction. If the Fed feels that the economy is heating up too much and is in danger of creating an economic bubble or inflation, it can use monetary tightening to push interest rates higher, creating friction intended to slow growth. The Tobin tax on currency trading, first proposed in 1972, was explicitly conceived as an imposed friction that would slow down the most dramatic and damaging swings in currency movements. By adding a cost to the execution of each trade, the tax would discourage currency traders from trading in an unfettered way that would drive down the currency of a given country.

The principle isn’t to eliminate the pressure for efficiency. That would be equally disastrous. The principle is to create friction that ameliorates the damaging extremes of pressure.

Design for adaptivity

In a complex adaptive system, causal relationships among elements in the system aren’t stable. By the time we decide what to do, it is quite possible, if not likely, that the system has changed in a way that renders our decision obsolete. And by the time we have figured that out, the system will have changed again. Because of that adaptivity, our design principle must be to balance the desire for perfection with the drive for improvement.

In a machine model, the pursuit of perfection makes sense. Analyzing the machine in every detail makes it possible to understand how to maximize its performance. Once performance has been maximized, any failure is likely to be interpreted as pilot error or not giving the machine enough input or time. This is what philosophers call a justificationist stance. There is a perfect answer out there to be sought, and when that perfect answer is found, the search is over. The task then turns from searching for the perfect answer to protecting the perfect answer. It feels noble to aim for, fight for, and protect perfection.

However, in an adaptive system, there is no perfect destination; there is no end to the journey. The actors in the system keep adapting to how it works. In nature, this happens reflexively, as with a tree that turns to the sunlight due to the force of nature, and by growing taller obscures the sunlight for those in its increasing shadow. In the economy, adaptation happens reflectively. People take in the available inputs and make choices, and those choices influence the choices and behaviors of the other humans in the system.

This means that players will try to game any change in a system the moment that change is put in place. It is inevitable. If you are offered a bonus for achieving your sales budget, you will work hardest not to sell more of the product or service in question but rather to negotiate the lowest possible sales budget to make achieving it easiest and most likely. Attempting to prevent gaming with an inspired design is a fool’s errand. Gamers will exploit whatever solution is in place, and sooner or later, the solution will become dysfunctional.

So, although the pursuit of perfection may seem like a noble goal, in a complex adaptive system it is delusional and dangerous. In a cruel paradox, seeking perfection does not enhance the probability of achieving said perfection. In a complex adaptive system, it is not possible to know in advance the organized, sequential steps toward perfection. Guesses can be made. Better and worse vectors can be reasonably chosen. But perfection is an unrealistic direct goal, with the problematic downside of creating a paradise for gamers. As justificationists staunchly defend a system they perceive to be perfect, gamers are only given more time and space to enrich themselves at the system’s long-term expense.

Hence, we must balance the understandable desire for perfection with an incessant drive for improvement. Given the impossibility of finding anything resembling a permanent fix, we need to engage the system not in a spirit of periodically and dramatically fixing it — despite the political attractiveness of seeming to offer “the answer” — but rather with the intention of tweaking it on a continuous, incremental basis. Those tweaks will never be perfect. And an error is not a bug: It is a feature, an important and inevitable signal that it is time to initiate another intervention. The experience of error is consistent with the march toward ever-better answers — ever-better models. Even the cleverest designs will be gamed. Like the economy, software works as a natural system in this respect: Hackers will always hack, and patches will always be needed — again and again and again.

Design for systemic structure

Recall that in a natural system there are numerous interdependencies. The core design principle with respect to the systemic structure is to balance connectedness with separation. As with the overall balance between efficiency and resilience, with the balance between pressure and friction, and with the balance between perfection and improvement, there is danger on both sides of the desirable path.

Interdependence amplifies the pressure effect of efficiency. It’s no surprise, therefore, that the transformation from a bell-shaped distribution of economic outcomes to a collapsed distribution, with more wealth at the very top of the economic pyramid, is accelerating. We are currently connecting more and more things, in more and more tightly coupled ways. The internet of things (IoT) is the latest generation of enhanced connectedness. Untold billions of devices will be connected to provide real-time information, computer to computer. Systems everywhere are becoming tightly coupled. Much of this is good, indeed very good. A connected world is more efficient. A connected world drives out transaction costs and unnecessary rework. Humans are already tightly connected at fractional costs; now machines will be too, and machines to humans, and vice versa.

But tightly coupled systems can fail catastrophically. In 2003, the entire U.S. Northeast and central Canada experienced a power blackout because a single power line in Ohio came in contact with a tree branch. This relatively minor fault cascaded through the tightly coupled system, and, once it hit a critical stage, 265 power plants went off-line in about three minutes. It took a week to put that particular Humpty-Dumpty back together, during which tens of millions of people and their businesses went without electrical power.

The solution for avoiding this sort of calamity is not full separation. It is to attempt to understand and benefit from connectedness but balance it with separation, because when outcomes are interdependent, effects become the causes of more of the given effect, and the proverbial sand pile collapses. That is precisely why tightly coupled systems are prone to total meltdowns. The most direct way to reduce dysfunctional connectedness is to install firebreaks, an idea that comes from forest management. In a dry season, a bolt of lightning may set a tree ablaze, and if there is a strong wind and all the trees are dry, the fire can spiral rapidly out of control. To reduce the risk of losing commercial timber to fire, forest managers introduce gaps across which the fires cannot easily pass, whether those gaps are an existing road or a stream or a path that is bulldozed to impede a forest fire already underway. Doing this creates productive separation in a system that is too tightly coupled to exhibit the requisite resilience.
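The firebreak logic can be made concrete with a toy model. The sketch below is only an illustration under simplifying assumptions: the forest is a single row of cells, fire spreads only between adjacent trees, and the function name `burned_trees` and the layouts are invented for this example rather than taken from forest management practice.

```python
def burned_trees(forest: str, strike: int) -> int:
    """Count trees burned when lightning strikes position `strike`.

    `forest` is a row of cells: "T" is a dry tree, "." is a gap (a firebreak).
    Assumption: fire spreads only between adjacent trees, so a gap stops it.
    """
    if forest[strike] != "T":
        return 0  # lightning hit a gap; nothing ignites
    left = strike
    while left > 0 and forest[left - 1] == "T":
        left -= 1  # fire runs leftward until it meets a gap or the edge
    right = strike
    while right < len(forest) - 1 and forest[right + 1] == "T":
        right += 1  # fire runs rightward until it meets a gap or the edge
    return right - left + 1


dense = "T" * 12                 # tightly coupled: twelve trees, no gaps
with_breaks = "TTTT.TTTT.TT"     # a similar row with two firebreaks

print(burned_trees(dense, 5))        # 12: the whole row burns
print(burned_trees(with_breaks, 5))  # 4: only the segment between the gaps burns
```

In this toy setting, the two gaps cost a couple of trees up front but cap the loss from any single strike at one segment, which is the trade between efficiency and resilience in miniature.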

Productive separation has long been a tool in financial regulation. The Glass–Steagall Act of 1933, which was part of the New Deal legislative agenda, was designed to create a firebreak between the banking and securities industries after the crash of 1929. Some historians do not believe that the crash of 1929 was driven by commercial banks participating excessively in the securities business, but that was certainly the assumption of the framers of Glass–Steagall, which prevented commercial and investment banking institutions from venturing into each other’s businesses. The idea was that if one of the industries experienced a meltdown, the other would remain relatively unaffected. In 1956, the Bank Holding Company Act plowed a further firebreak, between banking and insurance.

These firebreaks were removed starting in the 1980s in the spirit of efficiency and connectedness. The theory was that a firm that spanned these industries would be able to serve customers in a broader and more seamless fashion and would therefore be a more efficient delivery mechanism. Once again, experts disagree on the consequences of the removal of this firebreak. Some view the removal as a factor contributing to the global financial crisis. Others view it as not relevant. It is never possible to be certain in such a complex adaptive system, but it is clear that adaptation of a sort not necessarily anticipated by those removing the firebreaks ran rampant.

Another bit of productive separation is the circuit breaker system used today on most stock markets. The idea of the circuit breaker was spurred by the October 19, 1987, fall of 508 points in the Dow Jones Industrial Average. At the time, it was the biggest absolute (though not percentage) drop in history. The problem was that the effect (dropping stock prices) became the cause (I should sell now before the market drops further) of more of the effect (dropping stock prices) and so on, for 508 points of decline. The tight connection of instant data and instant reaction producing more data and more instant reactions had to be separated. That was achieved by way of a circuit breaker. Following a drop of predetermined magnitude, trading is halted to give participants a chance to think more carefully, rather than simply reacting as fast as possible to get their trades in ahead of others and limit their own losses.
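A circuit breaker of this kind reduces to a simple threshold rule. The following is a minimal sketch, not the rule of any actual exchange: the function name `first_halt_index`, the 7 percent threshold, and the price series are all hypothetical values chosen for illustration.

```python
from typing import Optional, Sequence


def first_halt_index(
    prices: Sequence[float], reference: float, max_drop: float
) -> Optional[int]:
    """Return the index of the first price that trips the breaker, or None.

    The breaker trips when the decline from `reference` (for example, the
    prior close) reaches `max_drop`, expressed as a fraction (0.07 = 7 percent).
    """
    for i, price in enumerate(prices):
        decline = (reference - price) / reference
        if decline >= max_drop:
            return i  # trading would be halted at this point
    return None  # the threshold was never reached


# Hypothetical intraday prices after a prior close of 100.0.
prices = [99.0, 97.5, 95.0, 92.5, 90.0]
print(first_halt_index(prices, reference=100.0, max_drop=0.07))  # 3
```

In this example the halt arrives at the fourth price, a 7.5 percent decline, so the final print at 90.0 would not trade until the pause ends. The rule says nothing about what the right price is; it only interrupts the loop in which each drop prompts the next round of selling.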

As you work to strike a balance, be aware that connectedness is an inherent property of natural systems and a source of efficiency, which needs encouragement as much as resilience needs protection. It is important to look out for and recognize these connections, because failing to recognize them means that you run the risk of a reductionist’s trap: not recognizing when dysfunction in one part of the system may spread to another part. A further complication is that many important connections hide in plain sight.

Designing to avoid an underappreciation of connectedness (i.e., too much assumed separation) is tricky. The complexity element of a complex adaptive system ensures that it is not a straightforward task to identify all the relationships in the system. Systems theorist John Sterman provides a useful perspective: “There are no side effects — only effects. Those we thought of in advance, the ones we like, we call the main, or intended, effects, and take credit for them. The ones we didn’t anticipate, the ones that came around and bit us in the rear — those are the ‘side effects.’”

It would be unhelpful to admonish designers to figure out all the effects in advance so that the system doesn’t fail because of “side effects,” since there will always be unexpected effects. On September 11, 2001, the majority of large organizations located in lower Manhattan had carefully designed backup telecommunications facilities to take over in case their primary one failed. However, none of those organizations took into account that the majority of the redundant communications cables in lower Manhattan ran directly under the World Trade Center and would be wiped out when the Twin Towers collapsed. To ask the designers to anticipate a terrorist attack that would destroy New York’s two biggest buildings is entirely unrealistic.

But it is not unrealistic to ask designers of systems to pay close attention to unexpected effects when they appear, rather than ignore them because they don’t fit the model. I learned an important lesson in this when I studied what helped Dr. Stephen Scherer become one of the world’s preeminent researchers of autism spectrum disorder and genomics. For Scherer, anomalies are a treasure trove: “My belief is that answers to really difficult problems can often be found in the data points that don’t seem to fit existing frameworks. To me, those little variations are like signposts saying: ‘Don’t ignore me!’”

Scherer may be in the minority in terms of keeping an eagle eye on effects that don’t comport with the model of the day, but he isn’t alone in doing so. Sometimes the financial regulators do pay attention. When the consensus estimates of analysts and their buy/hold/sell recommendations became more central to the capital markets after the passage of the Private Securities Litigation Reform Act, regulators noticed that the analyst ratings were getting ever more dramatically weighted toward “buy” recommendations. Why would that be? Well, it was very helpful to the analysts’ colleagues in the investment-banking department, when attempting to sell business to a given company, if there was a positive, optimistic buy recommendation out on the stock of that company. While regulators had hoped that the analysts would do rigorous evaluation of each company’s stock and provide an unbiased recommendation, it turned out that there was an unforeseen connection between the hopes and desires of the investment bankers and the preponderance of buy recommendations on the part of their analyst colleagues.

In 2002, in response to understanding this connection, the Securities and Exchange Commission approved NASD Rule 2711 (the NASD was a predecessor of today’s Financial Industry Regulatory Authority, or FINRA), mandating that “a member must disclose in each research report the percentage of all securities rated by the member to which the member would assign a ‘buy,’ ‘hold/neutral,’ or ‘sell’ rating.”

It is unlikely that this has fixed the problem entirely, but at least it acknowledges the connectedness where it was shown both to exist and to produce problematic outcomes. In these ways, designing for systemic structure can create productive separation where tight coupling threatens to collapse the distribution of economic outcomes and can adjust for nonobvious connectedness that would otherwise produce equally problematic outcomes.

Agendas for change

As we embark on our journey to repair our broken system, we need to recognize that no single actor can create the necessary shift on his or her own. Unsurprisingly, in this complex system, there are many important and interrelated actors. All the stakeholders in democratic capitalism have their parts to play. Business executives need to accept that they are participating in a complex adaptive system rather than operating a machine, and manage accordingly. Political leaders need to recognize both that their job is not to promote unalloyed pursuit of efficiency and that they are overseeing a complex adaptive system rather than a perfectible machine. Educators need to understand that their job is to prepare graduates to operate effectively in a complex adaptive system, and teach accordingly. Citizens have to take on the assignment of aiding the functioning of the complex adaptive system by enforcing more friction in order to encourage more resilience. Really, there is no excuse not to get started. The downside of the status quo is staring Americans in the face. But, collectively, we can restore balance if we just get started, stay reflective, and tweak relentlessly.

Author profile:

- Roger L. Martin is a professor emeritus at the Rotman School of Management at the University of Toronto, where he served as dean from 1998 to 2013, and as institute director of the Martin Prosperity Institute from 2013 to 2019. In 2017, he was named the world's number one management thinker by Thinkers50.

- Reprinted by permission of Harvard Business Review Press. Adapted from When More Is Not Better: Overcoming America’s Obsession with Economic Efficiency. Copyright 2020 Roger L. Martin. All rights reserved.