Siri, Who Is Terry Winograd?

For 40 years, the Stanford professor has steered the increasingly complex and meaningful interactions between humans and computers.

A version of this article appeared in the Spring 2017 issue of strategy+business.

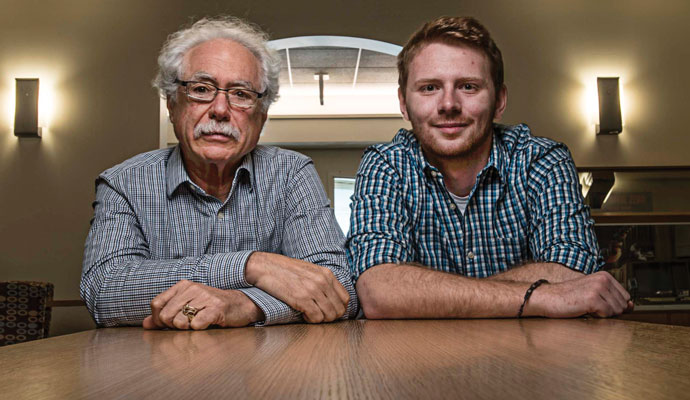

On the Stanford University campus, you could practically throw a rock and hit 100 graduate students who are building apps that enable people to communicate more effectively. But Terry Winograd is particularly enthusiastic about the app one of his graduate students, Catalin Voss, is working on. Voss, a native of Germany who completed his bachelor’s and master’s degrees last June at the age of 21, is working on an app that deploys Google Glass, linked to a smartphone, to help autistic children recognize human emotions through facial expressions.

Venture capitalists weren’t interested, even though Voss, while still a freshman, had created a startup that used eye-tracking technology to monitor attentiveness and sold it to a Toyota subsidiary. But Terry Winograd was interested. “It runs, it has AI [artificial intelligence],” says Winograd, who 20-odd years ago advised another graduate student on the then nascent field of searching the World Wide Web. “It’s at a stage where we’ve actually put 30 devices into homes. Our goal is to have 100 in the trial.”

Voss says his objective is to build a medical product that insurers will be willing to pay for. “We want to prove the investors wrong, who didn’t believe in it, and build an aid for people with autism, and other mental disorders as well,” he says. “We believe we’ve built a fairly holistic system for mental health.”

Winograd was Voss’s first choice for an advisor even though the 70-year-old professor retired from teaching three years ago. (He continues to advise graduate students, without pay.) “I knew Terry from my freshman year at Stanford,” Voss says. “He’s known for all the fantastic work he’s done, but even more known for the people he’s advised.” Among them are Silicon Valley aristocrats such as Google cofounder Larry Page and LinkedIn founder Reid Hoffman.

“Terry has such a broad world view. One day he’s in Jerusalem with members of the U.S. Congress working on peace, the next writing a book, the next sitting in his office talking to me about my problems,” Voss continues. Winograd connected Voss with the Wall Lab at Stanford’s medical school, which is housing the research. “When you take them to Terry, suddenly they don’t seem like problems anymore,” Voss adds. “He’s a technology expert, Ph.D. advisor, and personal therapist all in one.”

An app to help autistic individuals relate better to other people is classic Winograd. He cares deeply about the human side of human–computer interaction, and especially about harnessing the power of technologies such as artificial intelligence to augment human capabilities rather than to supplant them. Although Winograd has nothing against powerful algorithms, he wants them to reflect and incorporate the complexity of being in the world, of being human and using language.

Indeed, few people have been as important in the development of our hyperconnected, smart-machine world as Terry Winograd. As a graduate student at MIT in the late 1960s, Winograd wrote one of the seminal programs in the emerging discipline of artificial intelligence. But after he moved to California and became more deeply immersed in philosophy and the real-world experience of machines, Winograd reached a conclusion that marked him as something of an apostate. Intelligence wasn’t simply a matter of pattern recognition and processing data; it involved being and existing. And as a result, computers alone couldn’t possess true intelligence. The proper role of artificial intelligence was therefore to help humans live more fully human lives, not to replace them. He became, in the words of historian and New York Times technology writer John Markoff, “the first high-profile deserter from the world of AI.”

In a remarkably fruitful career that has spanned four decades, the “deserter” founded Stanford University’s graduate program in human–computer interaction, created programs in symbolic systems and liberation technology, and, with David Kelley of IDEO and colleagues in several departments of the engineering and business schools, cofounded the Hasso Plattner Institute of Design at Stanford, better known as the d.school. Along the way, he became the advisor of choice for second-generation Silicon Valley. A classic Fortune magazine cover shows Larry Page with a broad smile and the caption, “The Best Advice I Ever Got.” That was Winograd’s suggestion that he work on Web search. Hoffman credits Winograd with steering him down the path that led to the founding of LinkedIn. Countless other successful engineers and entrepreneurs credit him as a mentor nonpareil. Mike Krieger, for example, the chief technology officer and cofounder of Instagram, was a Winograd advisee, as was Sean White, who heads up technology strategy for Mozilla.

“I’m often asked what the strongest thing about Stanford University is, and the answer is the students it attracts,” Winograd says. “The reputation gets people here who are going to do big things whether you meet them or not. My whole concept is to understand the context, not how you write the algorithm. Think about the people, not the technology.”

Since its inception, AI’s development has traced a sine wave, with peaks of achievement and elation followed by troughs of stasis and despair. In the so-called AI winter of the mid-1980s, when I started writing about technology for the New York Times, my editors wanted only stories about why AI had failed. Now, as headlines hype self-driving cars, robotic surgery, and sex droids, it’s springtime for AI again. Funding in AI startups more than quadrupled between 2011 and 2015, to US$681 million from $145 million, and it could reach $1.2 billion in 2016, according to CB Insights. An anti-AI backlash is also under way; multiple new books are predicting a dystopic jobless future, and even celebrity techies such as Tesla founder Elon Musk are warning that AI could be the greatest danger facing humanity.

Winograd’s calm, grandfatherly demeanor is a welcome respite in this overheated milieu. Soft-spoken, with a halo of white curls around a friendly face, Winograd is less Han or Luke, Darth or Obi-Wan — more Yoda. He has authority and understands how things work at the most fundamental bits and bytes level, but is neither thrilled nor overly concerned about AI’s potential. AI could do a few things in the 1970s, and it can do more things now, but it is still up to humanity whether AI will be a force for good or ill.

Understanding Natural Language

In a lecture about human–computer interaction, or HCI, Winograd shows a slide of a researcher operating a replica of the Babbage Engine. The mechanical calculating machine built in London in the early 1800s is widely considered the first computer, even though Charles Babbage’s original prototype never worked properly. In the photo, the researcher has his hands deep in the guts of the working replica, which weighs five tons and has more than 8,000 parts. This is visceral HCI.

Winograd flashes forward to a picture of the first computer he ever touched, a Control Data Corporation 160, which he encountered as one of the few math majors at Colorado College in the 1960s. It was about the size of a desk, small for its day, and Winograd’s interaction with it was nearly as physical as that of the Babbage operator. “I wrote a program for it, along the way reinventing various wheels that I didn’t know existed in computing,” he recalls. “I literally programmed it by pushing the console buttons; I didn’t know they had assemblers.” (Assemblers are programs that convert basic computer instructions into a pattern of bits that the computer’s processor can use.)

Winograd’s father had a B.S. in electrical engineering, though he made his living in the steel business, and his mother was a “civically involved” homemaker who went back to college in the late 1960s to get a degree in sociology. Growing up in Greeley, Colo., Winograd was interested in science, technology, math, and tinkering. “I did things like building a Heathkit amplifier and hooking up a Model T coil to doorknobs to shock my brothers.” His high school science project was designing and building a primitive computer.

After graduating in 1966, Winograd received a Fulbright scholarship and pursued his other interest, language, earning a master’s degree in linguistics at University College London. He applied to MIT, where Marvin Minsky had built one of the hubs of artificial intelligence — the then fanciful idea that a machine could mimic human cognition, by learning and solving problems that appeared to require conscious thought. MIT was also then home to Noam Chomsky, who posited a privileged place for language — and linguistics — as the key to a true science of human cognition, stemming from a structurally unique language faculty that could not be reduced to general intelligence.

The Minsky and Chomsky camps had little to say to each other. And although Winograd’s mix of interests might have made him a bridge between these two shores, it was not to be. “I took one class from Chomsky, and he flunked me,” Winograd recalls. “If you told his people you were from AI, they would walk away from you.”

Winograd soon became a star of the AI department, working directly with Minsky and Seymour Papert, co-inventor of the Logo programming language, an early tool to help children think and solve problems. He claimed his place in the firmament when he developed SHRDLU, which allowed a user to interact with a computer using natural language. The name came from ETAOIN SHRDLU, the letters on the first two columns of keys on a Linotype machine. A typical SHRDLU exchange looked like this:

PERSON: Pick up a big red block.

COMPUTER: OK.

PERSON: Grasp the pyramid.

COMPUTER: I DON’T UNDERSTAND WHICH PYRAMID YOU MEAN.

PERSON (changing his mind): Find a block which is taller than the one you are holding and put it into the box.

COMPUTER: BY “IT,” I ASSUME YOU MEAN THE BLOCK THAT IS TALLER THAN THE ONE I AM HOLDING.

PERSON: What does the box contain?

COMPUTER: THE BLUE PYRAMID AND THE BLUE BLOCK.

PERSON: What is the pyramid supported by?

COMPUTER: THE BOX.

PERSON: How many blocks are not in the box?

COMPUTER: FOUR OF THEM.

PERSON: Is at least one of them narrower than the one which I told you to pick up?

COMPUTER: YES, THE RED CUBE.

PERSON: Is it supported?

COMPUTER: YES, BY THE TABLE.

PERSON: Can the table pick up blocks?

COMPUTER: NO.

If that doesn’t seem very exciting, remember that in 1968 human–computer interaction consisted of punched cards and printouts, with a long wait between input and output. To converse in real time, in English, albeit via teletype, seemed magical, and Papert and Minsky trumpeted Winograd’s achievement. Their stars rose too, and that same year, Minsky was a consultant on Stanley Kubrick’s 2001: A Space Odyssey, which featured natural language interaction with the duplicitous computer HAL.

Inevitably, there was a backlash. Some computer scientists said SHRDLU’s parameters were so narrow that it was a leap too far to call it intelligent. SHRDLU “was just very constrained; there were very few words you could use,” says Ben Shneiderman, a professor of computer science at the University of Maryland. “It had the illusion of broader capabilities, but it was really restricted to just a few words and concepts. It had that usual aspect of demos where what you see is very good and you assume it can do a lot more, but it doesn’t.”

Nowadays, Winograd calls that “a perfectly legitimate critique.” But Minsky and Papert brooked no criticism. Minsky had already announced, “Within a generation, the problem of creating ‘artificial intelligence’ will be significantly solved,” and held up SHRDLU as an example. The narrow domain of SHRDLU and other early AI programs constituted “micro worlds,” Minsky and Papert wrote. And although there would be many micro worlds, a computer with enough memory and processing power could understand them all.

The most scathing critique came from an unexpected quarter: Hubert Dreyfus, a philosopher and Heidegger scholar, who had recently left MIT for the University of California at Berkeley. In a series of papers and books, Dreyfus blasted AI research in general and SHRDLU in particular for a grave misrepresentation of the very nature of understanding. He pointed out that the programs had repeatedly failed to understand simple children’s stories.

“The programs lacked the common sense of a four-year-old, and no one knew how to give them the background knowledge necessary for understanding even the simplest stories,” Dreyfus wrote in What Computers Still Can’t Do: A Critique of Artificial Reason (MIT Press, 1992). “An old rationalist dream was at the heart of the problem. GOFAI [good, old-fashioned artificial intelligence] is based on the Cartesian idea that all understanding consists in forming and using appropriate symbolic representations.”

Dreyfus, then dubbed the Dark Knight of AI, said his study of philosophers such as Heidegger, Maurice Merleau-Ponty, and, later, Ludwig Wittgenstein supported his intuition that the symbolic representation and micro worlds of early AI would inevitably fail. He was correct in that prediction; today’s successful AI programs use different technology. But he probably couldn’t have predicted that he and Terry Winograd would later become friends.

Understanding Computers and Cognition

In the early 1970s, the three loci of artificial intelligence development were Carnegie Mellon, where Herbert Simon and Allen Newell invented the field in the 1950s; MIT, where Minsky and Papert held sway; and Stanford, where John McCarthy created SAIL, the Stanford Artificial Intelligence Laboratory, in the foothills behind the university. The three labs had very different approaches, reflecting their founders’ different mind-sets. “Simon at Carnegie had received a Nobel for economics, Newell had done research in cognitive psychology, and they had the idea that AI would enable understanding of human cognition,” Winograd recalls. “McCarthy at Stanford was a mathematician. His view was that intelligence was basically a kind of logic. MIT was the realm of the hackers. Minsky’s idea of intelligence was that it was just a lot of bits and pieces of code that evolution has hacked together over the years.”

Winograd left MIT for Stanford in 1973. His wife, Carol, had just completed her medical degree and landed a residency at San Francisco General Hospital. Stanford happened to have open a one-year position filling in for a professor on leave who specialized in natural language. That professor never returned; Winograd stayed for 40 years.

But it was the labs at the nearby Xerox PARC, the copier giant’s Palo Alto Research Center, that fired Winograd’s imagination. “Stanford was a home, Stanford was students, but it wasn’t really an intellectual center for what I was doing,” Winograd says.

Those were heady times at PARC. The Xerox Alto, the first computer with a graphical user interface, was launched in March 1973. Alan Kay had just published a paper describing the Dynabook, the conceptual forerunner of today’s laptop computers. Robert Metcalfe was developing Ethernet, which became the standard for joining PCs in a network. Winograd, with characteristic self-effacing humor, says, “You know all those cool things invented at PARC? I didn’t work on those.”

He did work on KRL — knowledge representation language, a branch of AI that incorporates findings from psychology about how humans solve problems and represent knowledge. It didn’t go well, in part because the machines available weren’t sufficiently powerful to do the necessary computing work.

But in 1976, Winograd met Fernando Flores, and his life changed. Flores has that effect on people. A former minister of trade under Chile’s socialist president Salvador Allende, Flores spent three years in prison following the coup led by Augusto Pinochet in 1973. Plucked from the gulag by Amnesty International, Flores landed in the computer science department at Stanford because one of his rescuers had a position there. He had spent his prison years reading philosophy books smuggled in by friends and family, and emerged steeped in phenomenology, the study of the structures of experience and consciousness.

Flores and Winograd began attending a series of informal lunches at Berkeley, led by Dreyfus and John Searle, another bête noire of the AI crowd, who had published a famous takedown of Alan Turing’s test for artificial intelligence. The conversations were wide-ranging, and no efforts at conversion were made, but Winograd emerged from them with a new understanding of cognition as a fundamentally biological phenomenon.

Winograd’s embrace of phenomenology pushed him toward a firmer grasp of AI’s limitations. Winograd and Flores began work on a book — Understanding Computers and Cognition: A New Foundation for Design (Ablex). It took them 10 years to complete, but it has never been out of print since its publication in 1986 and is still earning rave reviews on Amazon, some presumably from readers not yet born when it was written.

The book draws from three inspirations. The first was Heidegger’s concept of cognition. Cognition, he declared, was not based on the systematic manipulation of representations, but was an artifact of dasein, of being in the world. The second inspiration came from the Chilean biologist and philosopher Humberto Maturana, who had been among those smuggling books to Flores in prison. In The Tree of Knowledge: The Biological Roots of Human Understanding (New Science Library, 1987), Maturana and his coauthor, Francisco Varela, made the case that cognition is not a representation of the world, but rather a “bringing forth of the world through the process of living itself,” and that “we have only the world that we can bring forth with others.”

The third inspiration was John Searle’s concept of speech acts, a theory first articulated by Oxford University professor J.L. Austin in a series of lectures published posthumously in 1962 as How to Do Things with Words (Oxford/Clarendon Press). Winograd and Flores use speech act theory as a starting point for an understanding of language as an act of social creation. Foreshadowing a world to come of groupware and social media, they write that most communication between individuals consists not of information, but of prompts for action: requests, offers, assessments.

Understanding Computers and Cognition doesn’t really attempt to synthesize these three big ideas. But it draws upon them to arrive at a conclusion that, at the time it was published, read as heresy in certain circles: “We argue — contrary to widespread current belief — that one cannot construct machines that either exhibit or successfully model intelligent behavior.”

This was not an argument calculated to win favor at Stanford, where McCarthy’s lab was an acknowledged world leader, heavily invested in the standard approach to artificial intelligence. “Terry was, of course, the bad boy,” recalls Hoffman of LinkedIn, who was then one of his students. “I had conversations with John McCarthy at the time, where John [said], ‘You know, Terry’s totally crazy, gone off the deep end, needs to be on his meds,’ etc.”

Putting the H in HCI

In his Machines of Loving Grace: The Quest for Common Ground between Humans and Robots (HarperCollins, 2015), a history of artificial intelligence, John Markoff recounts the schism between the devotees of artificial intelligence, such as Minsky, who quipped that robots would “keep us as pets,” and the community of intelligence augmentation, IA, whose members believed that computers should aid humanity, not supplant it. Foremost among the second group was Doug Engelbart at Stanford Research Institute, inventor of the computer mouse and of hypertext. Markoff sees Winograd as a critical link between AI and IA.

“Winograd,” Markoff writes, “chose to walk away from the [AI] field after having created one of the defining software programs of the early artificial intelligence era and has devoted the rest of his career to human-centered computing, or IA. He crossed over.”

But Winograd did not desert Stanford, his students, or the computer science department. Instead, he collaborated with faculty from a surprisingly diverse set of departments to create a new undergraduate major that combined engineering, the social sciences, and humanities: symbolic systems. The program, still going strong today, draws faculty from computer science, linguistics, philosophy, psychology, communication, statistics, and education. Its students pursue various occupations, including software design and applications, teaching and research, law, medicine, and public service. Stanford being Stanford, many graduates create startups.

Hoffman, the eighth student to enroll in the symbolic systems major in 1987, says the program saved him from having to create his own major, because grasping the confluence of computers, cognition, and communication called for Winograd’s interdisciplinary approach. The program, and Hoffman’s relationship with Winograd, shaped his life. “Terry, among other things, was probably directly responsible for me going to Oxford,” Hoffman says. “I became convinced that we didn’t understand what thought and language were, and that I had to go study philosophy in order to do this. I also probably wouldn’t have paid as much attention to human–computer interaction if it weren’t for Terry. In my very first real job, I was a contractor in user experience at Apple. It came from having given years of thought to how to do that well, from questions Terry was asking.”

Although teaching has always been Winograd’s favorite role, he continually pursued other endeavors. In the early 1980s, he wrote a textbook, Language as a Cognitive Process: Syntax (Addison-Wesley, 1982). He cofounded Computer Professionals for Social Responsibility, a group concerned about nuclear weapons, the Reagan administration’s Strategic Defense Initiative, and increasing participation by the U.S. Department of Defense in the field of computer science. And he took a year’s leave to help Flores create a startup, Action Technologies.

In 1986, Action Technologies launched a program called the Coordinator that organized office life in terms of speech acts. An email message had to be explicitly labeled as a “request” or an “offer,” and a meeting added to employees’ electronic calendars would be termed a “conversation for action” or a “conversation for possibilities,” depending on the intent. These actions were synchronized and linked across the network to facilitate scheduling and collaboration. Years ahead of its time, the Coordinator attracted a loyal following and influenced many subsequent groupware products, such as Lotus Notes.
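To make the idea of labeling messages by speech act concrete, here is a minimal sketch in Python. It is not the Coordinator's actual design: the names `SpeechAct`, `Message`, and `pending_requests` are hypothetical, and only the "request" and "offer" labels come from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class SpeechAct(Enum):
    """The two message labels named in the article (illustrative only)."""
    REQUEST = "request"
    OFFER = "offer"


@dataclass
class Message:
    sender: str
    recipient: str
    act: SpeechAct  # every message must declare what kind of act it is
    body: str


def pending_requests(messages, person):
    """Return the requests addressed to `person`, in the order received."""
    return [
        m for m in messages
        if m.recipient == person and m.act is SpeechAct.REQUEST
    ]


# Hypothetical inbox: because each message carries an explicit label,
# software can list what someone has been asked to do without parsing prose.
inbox = [
    Message("ana", "ben", SpeechAct.REQUEST, "Please send the budget by Friday."),
    Message("ben", "ana", SpeechAct.OFFER, "I can review the draft tomorrow."),
    Message("cy", "ben", SpeechAct.REQUEST, "Can you book the meeting room?"),
]
for message in pending_requests(inbox, "ben"):
    print(f"{message.sender} asks: {message.body}")
```

The point of the sketch is the required `act` field: once intent is stated explicitly, tracking who owes what to whom becomes a simple filter rather than a reading-comprehension problem.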

“I became aware of the Coordinator, which to me was a real eye-opener, for the idea of using interpersonal communication in a structured way,” says Mitch Kapor, the founder of Lotus Development.

The Coordinator embodied Winograd’s human-centric approach. “He anticipated and was an early leader in refocusing the attention of people in computing professionally away from engineering and algorithms to taking up questions of how do computers serve humans well, or don’t,” Kapor says.

With the launch of the Macintosh, and Microsoft’s 1990 release of Windows 3.0, PCs spread beyond the original core of technology professionals and hobbyists. The term user-friendly entered the lexicon, and the look and feel of a software program suddenly mattered. The book Winograd edited in 1996, Bringing Design to Software (ACM Press), became a go-to text for developers.

During the same period, human–computer interaction began to evolve from the undertaking of a loose community of like-minded computer scientists to an academic discipline. HCI encompassed cognitive science, cognitive psychology, artificial intelligence, linguistics, cognitive anthropology, and philosophy of mind, a match for Winograd’s diverse preoccupations. He helped create Stanford’s HCI program in 1991.

“I really wanted to start at the master’s level,” says Winograd. “[The HCI program] was always much more oriented toward people going out and doing good jobs as opposed to the next big research thing. Students had realized that understanding something about how people used computers was going to be valuable in their jobs. I also had a few Ph.D. students.”

Downloading the Web

One of Winograd’s Ph.D. students was Larry Page. Page’s parents were both in computer science, and he had actually met Winograd for the first time when he was 7 years old. They spoke again years later when Page was choosing between Stanford’s Ph.D. program and those of other universities.

“I had sort of researched all the people I could work with and I was surprised that there was basically nobody else in the world who I wanted to work with besides Terry,” Page said at the 20th anniversary celebration of Stanford’s HCI program. “He was a really solid computer scientist, he understood that side — but he also had a great passion around something that I wanted to work on that I thought was very important, which was HCI, something almost nobody else seemed to get. I think that’s still true. I’m amazed that people think computers are about computers and not about people.”

Page brought Winograd at least 10 different ideas for a dissertation topic, including self-driving cars and telepresence, both of which Google is now pursuing quite seriously. But both were beyond the resources of a graduate student, no matter how talented. Then Page got interested in search.

“At some point I woke up and I decided it would be easy, really easy, to download the Web, the entire Web, and just keep the links,” Page recalls. “Terry said, ‘Yeah, that’s a good one, go and do that.’… I’m very indebted to him.”

Characteristically, Winograd downplays his role, even though his is the third name, after Page and Google cofounder Sergey Brin, on the academic paper describing PageRank, the algorithm behind Google search. Unlike previous search engines, such as AltaVista, PageRank based its results not only on internal references to the search term, but also on links from other sites, created by humans using the content. The more links to a site people had built, the more relevant it probably was, much the way academic papers’ ratings are based on the number of times they are cited in other papers.
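The citation analogy can be sketched in a few lines of Python. This is a simplified illustration of link-based ranking, not Google's production algorithm: the four-page `toy_web` is hypothetical, the function and parameter names are assumptions, and the damping factor of 0.85 is the value commonly cited for PageRank rather than anything stated in this article.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Score pages by incoming links using simple power iteration.

    `links` maps each page to the list of pages it links to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page gets a small baseline share each round.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly among its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank to all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three pages link to "hub", which links back to "a".
toy_web = {
    "a": ["hub"],
    "b": ["hub"],
    "c": ["hub", "a"],
    "hub": ["a"],
}
ranks = pagerank(toy_web)
best = max(ranks, key=ranks.get)
print(best)
```

In this toy web the most-linked page, "hub", comes out on top, and page "a" scores well above "b" and "c" because the highly ranked hub links to it. A link from an important page counts for more than a link from an obscure one.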

“Page and Brin were academic kids,” Winograd says. “They knew about academia even before they became Ph.D. students. So this idea of citations was in the back of their heads. The fact is that it wasn’t a very good dissertation project, but I said ‘Give it a try. I don’t see how you’ll turn this into a dissertation.’”

Page never did make a dissertation out of it. But he did create one of the world’s most valuable companies — Alphabet, Google’s parent, is worth more than $500 billion — as well as what Markoff calls the most significant “augmentation” tool in history. Page has often made time to lecture Winograd’s HCI classes. After one seminar, in 2000, Winograd suggested they collaborate on a book. Page quickly agreed and asked Winograd to take a year’s sabbatical to come work at Google.

Instead of working on a book, Winograd joined Google’s design team, a small group led by Marissa Mayer, then a fresh Stanford graduate. Among various projects, he worked on Caribou, which became Gmail, now the most used free email service in the world. He also became advisor to the company’s associate product managers, or APMs, as Google calls its new recruits. “I had half a dozen, maybe more, over time, basically grad students at Google,” Winograd recalls. “It was another opportunity to mentor, and I still have good relationships with a lot of them. They’ve done very well.”

So has Winograd. Half of his compensation at Google was in company shares, and the result has given Winograd and his wife the resources to donate generously to political campaigns and philanthropies that they care about. Many of these organizations are involved in forging peace between Israel and Palestine.

The Three Myths of AI

Winograd has no high-profile piece of technology to point to. In an age of software, it makes sense that his contributions are less tangible. He says his world view is most visible in Google’s many applications, not so much from his relatively brief tenure, but from the hundreds of his former students who work there. Many of them, he argues, have internalized his assumption that technology shouldn’t just work on its own, but that it should work for and with people.

“What are you producing for the person who’s using your system?” Winograd asks. “How do they experience it, not just in the sense of what do they see on the screen and so on, but what is the world you are creating that they’re then entering into by interacting with a device? Being conscious about that and then asking questions about what works and what doesn’t work is such a different point of view from the standard engineering approach to building interfaces.”

Heidegger used the term readiness-to-hand, and the example of a hammer. When you are hammering successfully, the hammer withdraws from your conscious awareness; it becomes transparent. Winograd uses the term fluency. When you speak your own language or are truly fluent in another, you don’t think about nouns, verbs, and tenses, you just speak. He aspires to that kind of transparency in HCI, and it’s not one size fits all. The keyboard interaction is still best for email, and the touch interaction of smartphones may be better for other things, such as finding a hookup on Tinder.

Smartphone interaction is evolving, as voice-controlled AI “assistants” — for example, Apple’s Siri and Microsoft’s Cortana — augment the touch-based interface. Winograd’s influence shows here as well. Even 20 years ago, he was demonstrating multimodal systems that combined huge screens with voice and physical manipulation.

“Siri is so often used as an example of AI today and progress, and maybe to some extent it kick-started this latest AI revolution, the ‘AI Spring,’” says Adam Cheyer, who developed Siri and is now chief architect of Viv, a second-generation digital assistant. “To me, Siri’s emphasis was purposely on the human augmentation side. Terry’s journey, from AI to IA, got me thinking maybe this is the right side to be on. Even in the very first version of Siri, there were elements of what Terry was working on at that time.”

Isaac Asimov first postulated his three fundamental “rules of robotics” in a 1942 short story, “Runaround,” which was set in 2015: “One, a robot may not injure a human being, or, through inaction, allow a human being to come to harm. Two, a robot must obey the orders given it by human beings except where such orders would conflict with the First Law. Three, a robot must protect its own existence as long as such protection does not conflict with the First or Second Law.”

Seventy-five years later, it seems to many that we are inhabiting a world of Asimov’s creation. Amid the startling real-world development, and the sometimes fanciful, sometimes apocalyptic musings of today’s futurists, it often seems as if software is eating the world and the machines are ascendant. Amid this gold rush, Winograd notes that three old myths have resurfaced: Robots will take our jobs; robotic sex will become a substitute for human intimacy; and (the big one) artificial intelligence will take over.

Winograd dismisses the first as simple Luddism and accepts the second as inevitable — “the robotics industry will find a market in robotic sex toys” — but he has a more nuanced view of the third myth. Our fate, he argues, relies less on machines and more on ourselves.

“The worry should be that we are choosing to give control to systems that don’t have human wisdom, human judgment,” he says. “We’re putting them into our infrastructure in a way which loses our control. It’s not because of the malevolent computers. It’s because we’ve decided we’re not going to bother. It’s more efficient to let the computer do it. Some examples are very mundane, like who gets loans. The algorithm decides who gets a loan. Are there any human considerations? Well, whatever was put in the algorithm. But then once that happens, you lose it. You don’t have a person in the loop.”

Whether it is perfecting the Google search algorithm or devising ways to more fully connect autistic individuals with their peers, Terry Winograd is still striving to ensure that people remain present in the infinite loop of code.

Reprint No. 17110

Author profile:

- Lawrence M. Fisher is a contributing editor of strategy+business. He covered business and technology for the New York Times from 1985 to 2000, and his work has also appeared in Fortune, Forbes, and Business 2.0. He lives near Seattle.